The domain of evaluation

“Evaluation is the process of determining the adequacy of instruction and learning” (Seels & Richey, 1994, p. 54).

Evaluation, like many of the other domains and sub-domains of instructional technology, is a field in itself, with its own industry of professionals, journals, and organizations. It grew out of the need for a more systematic and scientific approach to this commonplace human activity (Seels & Richey, 1994). Evaluation is the implied (but very real) third step of the 'trial and error' process.

The purpose of educational evaluation differs from that of traditional educational research. Educational evaluation exists to support sound value judgments and decisions, not to test hypotheses or advance generalized theory.

The evaluation domain is organized into five categories: problem analysis, criterion-referenced measurement, formative, summative and confirmative evaluation.

Problem analysis

"Problem analysis involves determining the nature and parameters of the problem by using information-gathering and decision-making strategies" (Seels & Richey, 1994, p. 56). This definition firmly ties the evaluation domain to the design domain. Both seek to identify needs, constraints, resources, goals (including whether those goals can be met by instructional means), and priorities.

Allison Rossett’s book Training Needs Assessment (1987) serves as a practical framework and process guide for conducting needs analysis. In it, Rossett details techniques and tools for gathering information in order to define the problem and identify the gap between optimal and actual levels of performance. She focuses on determining five types of information: actual, optimal, feelings, causes, and solutions.

Criterion-referenced measurement

"Criterion-referenced measurement involves techniques for determining learner mastery of pre-specified content" (Seels & Richey, 1994, p. 56). This type of measurement, often contrasted with norm-referenced measurement, judges student mastery against the objectives or criteria. For example, in a learning module whose objective is for students to learn 80% of the US state capitals, criterion-referenced measurement would give a passing grade to every student who scored 80% or higher. The judgment is made against the objective (the criterion), not against the performance of a representative sample (the norm).
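The contrast between the two kinds of judgment can be made concrete with a small sketch. The Python below is illustrative only: the learner names and scores on a 50-item state-capitals quiz are hypothetical, and the norm-referenced rule (pass only the top half of the group) is an assumption chosen to show how a learner who meets the 80% criterion can still "fail" when judged against peers.

```python
def criterion_referenced(correct, total, criterion=0.80):
    """Judge each learner against a fixed mastery criterion.

    Every learner whose proportion correct meets the criterion passes,
    no matter how the rest of the group performed.
    """
    return {name: n / total >= criterion for name, n in correct.items()}


def norm_referenced(correct, top_fraction=0.5):
    """Judge each learner by rank within the group.

    Assumption for illustration: only the top `top_fraction` of learners
    pass, so each outcome depends on the other learners' scores.
    """
    ranked = sorted(correct, key=correct.get, reverse=True)
    n_pass = round(len(ranked) * top_fraction)
    passing = set(ranked[:n_pass])
    return {name: name in passing for name in correct}


# Hypothetical results: number of capitals named correctly out of 50.
correct = {"Ana": 45, "Ben": 41, "Cal": 40, "Dee": 30}

print(criterion_referenced(correct, total=50))
# {'Ana': True, 'Ben': True, 'Cal': True, 'Dee': False}
print(norm_referenced(correct))
# {'Ana': True, 'Ben': True, 'Cal': False, 'Dee': False}
```

Cal answers exactly 40 of 50 (80%), so Cal meets the objective and passes the criterion-referenced judgment, but falls outside the top half of this particular group and fails the norm-referenced one.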

Formative evaluation

"Formative evaluation involves gathering information on adequacy and using this information as a basis for further development" (Seels & Richey, 1994, p. 57).

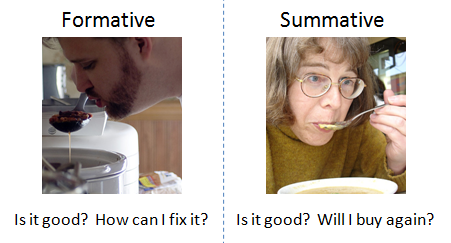

Formative evaluation is used by the designer to answer the question "Is this instruction working?" In contrast to summative evaluation, formative evaluation is conducted during design and development. Its purpose is to gather insights and data on how best to revise the instruction.

During this phase, the instructional designer conducts one-on-one and small-group tests. These tests can assess student performance on the learning objectives as well as the instructional material itself (logical sequence, understandable instructions, content accuracy, and quality). "Formative evaluation provides feedback [that] is used for improvement of the products at all stages of the ISD process" (Seels & Glasgow, 1998, p. 146).

The difference between formative and summative evaluation is summed up in a quote attributed to educational researcher Robert Stake: "When the cook tastes the soup, that’s formative; when the guests taste the soup, that’s summative."

Summative evaluation

"Summative evaluation involves gathering information on adequacy and using this information to make decisions about utilization" (Seels & Richey, 1994, p. 57).

Like formative evaluation, summative evaluation seeks to answer the question "Is this working?" Using criterion-referenced tests and other means, the designer evaluates whether the learning objectives are being met. Unlike formative evaluation, however, the purpose of summative evaluation is to make a decision about the instruction's utilization. It is conducted after the design and development process is complete and the instruction is fully implemented. It is also conducted for an external audience, such as funding agencies or prospective users (Scriven, 1967).

The instructional designer's activities differ between summative and formative evaluation. Unlike formative evaluation (which can be informal), summative evaluation requires formal procedures and the methods of empirical research. Also, to ensure objectivity, an unbiased external evaluator unaffiliated with the design, development, and implementation team conducts this phase (Seels & Richey, 1994).

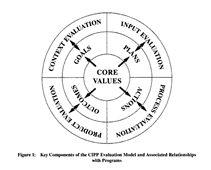

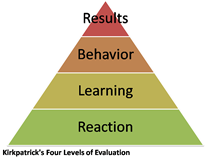

The theoretical foundation of the evaluation domain is supported by two evaluation models: Stufflebeam's CIPP model (Context, Input, Process, Product) and Kirkpatrick’s Four-Level Model (reaction, learning, behavior, results) (Kirkpatrick & Kirkpatrick, 2006).

Kirkpatrick's four-level model distinguishes four levels of evaluation. Reaction is the first level, the easiest to evaluate, and should be measured for every program. It measures learners' reactions to the instruction and can be measured reliably with a simple, short survey. The other three levels should be measured as staff time and money allow (Kirkpatrick & Kirkpatrick, 2006).